
Strains and plasmids

Ribosome mutant SQ380 strains (B68 strain, 30S helical extension in h33a; C68 strain, 30S helical extension in h44; and ZS22 strain, 50S helical extension in h101) and all plasmids for initiation and elongation factors were a gift from the Puglisi laboratory16,17. The plasmid for overexpressing initiator tRNAfmet (pBStRNAfmetY2) was a gift from E. Schmitt56. The plasmids for E. coli RNAP (pVS10) and σ70 (pIA586) were purchased from Addgene (#104398 and #104399, respectively)57. The ybbR peptide tag (DSLEFIASKLA)58,59 was cloned into the pVS10 plasmid by introducing the ybbR DNA sequence with primers, followed by 5′-end phosphorylation with T4 PNK (New England Biolabs, NEB) and simultaneous blunt ligation of the plasmid using T4 DNA ligase (NEB). For the N-terminal ybbR mutant, the tag was inserted between E15 and E16 in the β′ subunit; for the C-terminal β′ mutant, it was inserted between E1377 and G1402, thereby deleting the region A1378–L1401. Gene sequences of ribosomal protein S1, NusA, NusG, NusG(NTD) (M1–P124), NusG(F165A), methionyl-tRNAfmet formyltransferase (MTF) and methionine tRNA synthetase (MetRS, truncated at K548 to obtain the monomeric form of the enzyme60) were cloned into a pESUMO vector backbone using Gibson assembly. Gene sequences were obtained from ASKA collection plasmids61.

Sample preparation

E. coli ribosomal subunits, initiation factor IF2 and elongation factors (EF-Tu, EF-G and EF-Ts) were prepared and purified as previously described16,17. Wild-type RNAP and mutant versions were purified as described57. Initiator tRNAfmet was prepared and purified following the protocol described62. S1, NusA, NusG and NusG mutants were overexpressed as N-terminal fusions with His6–SUMO. They were purified by lysing the cells in IMAC buffer (50 mM Tris-HCl pH 8.0, 300 mM NaCl, 10–20 mM imidazole, 10 mM 2-mercaptoethanol) using a microfluidizer, loading the clarified lysate on 5 ml HisTrap HP columns (Cytiva) and eluting with a gradient of increasing imidazole concentration over 20 column volumes. Protein fractions were pooled and the fusion tag was cleaved overnight with His-tagged Ulp1 protease while dialysing against IMAC buffer without imidazole. The cleaved tag was removed by a second HisTrap purification. Protein fractions were pooled, concentrated using 15 ml Amicon Ultracel 10 or 30 K concentrators and further purified by size exclusion chromatography on HiLoad S75 (NusG/NusG(NTD)/NusG(F165A)) or HiLoad S200 (NusA, S1) columns (Cytiva) equilibrated with storage buffer (S1: 50 mM HEPES-KOH pH 7.6, 100 mM KCl, 1 mM DTT; NusA, NusG and NusG mutants: 20 mM Tris-HCl pH 7.6, 100 mM NaCl, 0.5 mM EDTA/DTT). Protein fractions were pooled and concentrated to ~100 µM using 15 ml Amicon Ultracel 10 or 30 K concentrators. Aliquots were flash-frozen with liquid nitrogen and stored at −80 °C. MTF and MetRS were prepared as described above with the following changes (adapted from refs. 62,63): (1) MTF IMAC buffer contained 10 mM K2HPO4/KH2PO4 pH 7.3, 100 mM KCl, 20 mM imidazole and 10 mM 2-mercaptoethanol; MetRS IMAC buffer contained 10 mM K2HPO4/KH2PO4 pH 6.7, 50 mM KCl, 20 mM imidazole, 100 µM ZnCl2 and 10 mM 2-mercaptoethanol.
(2) After a second HisTrap purification, MetRS-containing fractions were pooled and dialysed against storage buffer (10 mM K2HPO4/KH2PO4 pH 6.7, 10 mM 2-mercaptoethanol and 50% (v/v) glycerol). Aliquots were flash-frozen with liquid nitrogen and stored at −80 °C. (3) After a second HisTrap purification, MTF was further purified on a 5 ml HiTrap Q FF column (Cytiva) and eluted with a linear gradient of 20–100% Q-Sepharose buffer (10 mM K2HPO4/KH2PO4 pH 7.3, 500 mM KCl and 10 mM 2-mercaptoethanol). Protein fractions were pooled and dialysed against storage buffer (10 mM K2HPO4/KH2PO4 pH 6.7, 100 mM KCl, 10 mM 2-mercaptoethanol and 50% (v/v) glycerol). Aliquots were flash-frozen with liquid nitrogen and stored at −80 °C. An SDS–PAGE gel of all protein factors used in this manuscript is shown in Extended Data Fig. 1j. All uncropped gels are presented in Supplementary Fig. 1.

Charging tRNAfmet and elongator tRNAs

Typically, initiator tRNAfmet (20 µM) was simultaneously charged and formylated in 800 µl reactions using 100 µM methionine, 300 µM 10-formyltetrahydrofolate, 200 nM MetRS and 500 nM MTF in charging buffer (50 mM Tris-HCl pH 7.5, 150 mM KCl, 7 mM MgCl2, 0.1 mM EDTA, 2.5 mM ATP and 1 mM DTT) by incubation for 5 min at 37 °C (refs. 62,64). The fmet–tRNAfmet was immediately purified by addition of 0.1 volumes of sodium acetate (pH 5.2), extraction with aqueous phenol (pH ~4) and precipitation with 3 volumes of ethanol. The tRNA pellet was dissolved in ice-cold tRNA storage buffer (10 mM sodium acetate pH 5.2, 0.5 mM MgCl2) and further purified on a Nap-5 column (Cytiva) equilibrated with the same buffer. The eluate was aliquoted, flash-frozen with liquid nitrogen and stored at −80 °C. Elongator tRNAs were purchased (tRNA MRE600, Roche) and typically charged at 500 µM in the presence of 0.2 mM amino acid mix (each), 10 mM phosphoenolpyruvate (PEP), 20% (v/v) S150 extract (prepared following ref. 65), 0.05 mg ml−1 pyruvate kinase (Roche) and 0.2 U µl−1 thermostable inorganic pyrophosphatase (NEB) in total tRNA charging buffer (50 mM Tris-HCl pH 7.5, 50 mM KCl, 10 mM MgCl2, 2 mM ATP and 3 mM 2-mercaptoethanol)29. Typically, 200 µl reactions were incubated at 37 °C for 15 min and then immediately purified as described above. To remove NTP contamination introduced by the S150 extract, the aa–tRNAs were further purified on an S200 Increase column (Cytiva) pre-equilibrated in tRNA storage buffer. The eluted fractions were combined, concentrated with 2 ml Amicon Ultracel 3K concentrators, aliquoted, flash-frozen with liquid nitrogen and stored at −80 °C. Charging efficiency (typically >90%) was verified by acidic urea polyacrylamide gel electrophoresis as described64.

Dye labelling of expressome components

Hairpin loop extensions of mutant ribosomal subunits were labelled with prNQ087–Cy3 or prNQ159–Cy3B (30S) and prNQ088–Cy5 (50S) DNA oligonucleotides complementary to the mutant helical extensions16,17,19 (see Supplementary Table 3 for all DNA and RNA oligonucleotide sequences). Just before the experiments, each subunit was labelled separately at 2 µM using 1.2 equivalents of the respective DNA oligonucleotide by incubation at 37 °C for 10 min and then at 30 °C for 20 min in Tris-based polymix buffer (50 mM Tris-acetate pH 7.5, 100 mM potassium chloride, 5 mM ammonium acetate, 0.5 mM calcium acetate, 5 mM magnesium acetate, 0.5 mM EDTA, 5 mM putrescine-HCl and 1 mM spermidine). RNAP–ybbR mutants (core enzyme) were labelled by mixing 7 µM RNAP, 14 µM SFP synthetase and 28 µM CoA-Cy5 dye in a buffer containing 50 mM HEPES-KOH pH 7.5, 50 mM NaCl, 10 mM MgCl2, 2 mM DTT and 10% (v/v) glycerol66. Typically, 100 µl reactions were incubated at 25 °C or 37 °C for 2 h and analysed on denaturing protein gels. The holoenzyme was formed by incubating 1.11 µM Cy5-labelled RNAP with 3 equivalents of σ70 for 30 min on ice in RNAP storage buffer (20 mM Tris-HCl pH 7.5, 100 mM NaCl, 0.1 mM EDTA, 1 mM DTT, 50% (v/v) glycerol). Aliquots were stored at −20 °C. SFP synthetase and free dye were removed on the imaging surface before the experiments.

DNA templates were purchased (Twist Bioscience) and amplified by PCR using p0030 forward and p0075 reverse abasic primers, generating single-stranded 5′ overhangs on both DNA strands15. The fragments were purified on 2% agarose gels, extracted using a QIAGEN gel extraction kit and buffer-exchanged into e55 buffer (10 mM Tris-HCl pH 7.5 and 20 mM KCl) using 0.5 ml Amicon Ultracel 30 K concentrators. The 5′ overhang of the template DNA was hybridized by mixing with 1.2 equivalents of p0088–2×Cy3.5 DNA oligonucleotide at 68 °C for 5 min, followed by slow cooling (~1 h) to room temperature.

Single-round in vitro transcription assays

Stalled TECs were assembled in transcription buffer (50 mM Tris-HCl pH 8, 20 mM NaCl, 14 mM MgCl2, 0.04 mM EDTA, 40 µg ml−1 non-acylated BSA, 0.01% (v/v) Triton X-100 and 2 mM DTT) as described previously15,67. In brief, 50 nM DNA template was incubated (20 min, 37 °C) with four equivalents of RNAP in the presence of 100 µM ACU trinucleotide, 5 µM GTP and 5 µM ATP (+150–300 nM 32P α-ATP, Hartmann Analytic), halting the polymerase at U24, to prevent loading of multiple RNAPs on the same DNA template. Next, re-initiation of transcription was blocked by addition of 10 µg ml−1 rifampicin. The RNAP was walked to the desired stalling site by addition of 10 µM UTP and incubation at 37 °C for 20 min. The 30S ribosomal subunit was loaded for 10 min at 37 °C by incubating 25 nM stalled TEC with 250 nM 30S (B68 mutant, pre-incubated with stoichiometric amounts of S1 protein for 5 min at 37 °C) in the presence of 2 µM IF2, 1 µM fmet–tRNAfmet and 4 mM GTP in polymix buffer with 15 mM magnesium acetate. To enrich for fully assembled ribosome–RNAP complexes, the expressome was purified by immobilizing the 50S subunit (ZS22) on streptavidin magnetic beads (NEB). For this, the 50S subunit was pre-annealed on h101 with the prNQ302-prNQ303-prNQ304-p0109–biotin DNA oligonucleotide. The prNQ303 and prNQ304 DNA oligonucleotides contain a BamHI cleavage site for eluting the purified ribosome–RNAP complex from the streptavidin beads. Loading of the 50S subunit onto the stalled TEC/30S PIC occurred simultaneously with immobilization of the ribosome–RNAP complex on streptavidin magnetic beads (pre-equilibrated with polymix buffer with 15 mM magnesium acetate) for 10 min at room temperature. Typically, 50 µl beads were loaded with a total volume of 150 µl ribosome–RNAP complex. The immobilized complex was washed once with polymix buffer with 15 mM magnesium acetate and then eluted in 100 µl polymix buffer with 15 mM magnesium acetate while cleaving with BamHI for 20 min at 37 °C.
The eluate was chased with 50 µM NTPs in the presence or absence of Nus factors (1 µM each, when present), 4 mM GTP, polymix buffer with 15 mM magnesium acetate and 100 mM potassium glutamate. Time points were taken before NTP addition (t = 0 s) and at 10, 20, 30, 40, 60, 90, 120, 180, 240, 360 and 600 s for each condition by mixing 2.5 µl sample with 5 µl stop buffer (7 M urea, 2× TBE, 50 mM EDTA, 0.025% (w/v) bromophenol blue and xylene blue) and incubating at 95 °C for 2 min. Single-round transcription assays with 70S in trans were performed as described above with minor changes. Ribosomal subunits were loaded at 1 µM on 8 µM 6(FK) mRNA17 to ensure complete 70S PIC formation. Stalled TEC was added, and the reaction was chased with 50 µM NTPs (each) in the presence of 1 µM NusA, giving final concentrations of 350 nM 70S PIC and 6.25 nM stalled TEC. As a size reference, the ssRNA ladder (NEB; sizes: 50, 80, 150, 300, 500 and 1,000 nt) was 5′ end-labelled with 32P γ-ATP. The reactions were analysed on 6% denaturing PAGE (7 M urea in 1× TBE), run in 1× TBE at 50 W for 2–3 h. Gels were dried, exposed overnight on a phosphor screen (Cytiva, BAS IP MS 2040 E) and imaged using a Typhoon FLA 9500. Band intensities (P) were integrated using ImageLab 6.1 software (Bio-Rad) and divided by the total RNA per lane (T) to compensate for pipetting errors, as described68. Normalized band intensities (P/T) were plotted as a function of time. Pause-escape lifetimes were obtained as described68 by plotting ln(P/T) against time and fitting the pause-escape data range (indicated in plots) with a linear equation (y = mx + b); because ln(P/T) decreases with time, the magnitude of the slope m is the pause-escape rate and its inverse (1/|m|) the pause-escape lifetime. All uncropped gels are presented in Supplementary Fig. 1.
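A minimal Python sketch of the pause-escape fit described above, assuming first-order escape so that P/T decays as exp(−kt) within the chosen window. The function name and the synthetic numbers are illustrative; this is not the authors' ImageLab/MATLAB workflow.

```python
import numpy as np


def pause_escape_rate(t, p_over_t, t_min, t_max):
    """Linear fit of ln(P/T) versus time inside the pause-escape window.

    For first-order escape, P/T ~ exp(-k t), so ln(P/T) = m*t + b with m = -k.
    Returns the escape rate k (1/s) and the pause lifetime 1/k (s).
    """
    t = np.asarray(t, dtype=float)
    y = np.log(np.asarray(p_over_t, dtype=float))
    mask = (t >= t_min) & (t <= t_max)       # user-chosen pause-escape range
    m, b = np.polyfit(t[mask], y[mask], 1)   # polyfit returns slope first
    k = -m
    return k, 1.0 / k
```

For example, for synthetic P/T values decaying with k = 0.02 s−1 and sampled at the time points listed above, fitting the 20–360 s window recovers k = 0.02 s−1 and a lifetime of 50 s.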

Single-molecule transcription–translation coupling assays

Stalled TECs were formed as described for single-round transcription assays, with the following changes: (1) Typically, labelled DNA was used (pre-annealed with p0088–2×Cy3.5). (2) Stalled transcription was performed in the presence of 10 µM ATP and 10 µM GTP. (3) Re-initiation of transcription was blocked with 1 mg ml−1 heparin (final concentration). (4) For immobilization, the 5′ end of the nascent mRNA was labelled with a biotin–DNA oligonucleotide (prNQ127-p0109–biotin). (5) When necessary, Cy5-labelled RNAP was used (N-terminal or C-terminal ybbR tag). Typically, ribosomes (250 nM 30S, 500 nM 50S) were loaded for 5 min at 37 °C by incubation with 4 nM stalled TEC in the presence of 2 µM IF2, 1 µM fmet–tRNAfmet and 4 mM GTP in polymix buffer with 15 mM magnesium acetate. The loading reaction was diluted to 100–800 pM stalled TEC (based on DNA template concentration) with IF2-containing (2 µM) polymix buffer at 15 mM magnesium acetate and immobilized on NeutrAvidin-coated, biotin–PEG-functionalized slides for 10 min at room temperature69. Unbound components were washed away with IF2-containing (2 µM) imaging buffer (polymix buffer with 15 mM magnesium acetate and 100 mM potassium glutamate), and imaging was started immediately. The imaging buffer was supplemented with an oxygen scavenger system (OSC) containing 2.5 mM protocatechuic acid and 190 nM protocatechuate dioxygenase, and with a cocktail of triplet-state quenchers (1 mM 4-nitrobenzyl alcohol, 1 mM cyclooctatetraene and 1 mM Trolox) to minimize fluorescence instability. For equilibrium experiments, the imaging buffer additionally contained 4 mM GTP and 1 µM Nus factors (each; exact composition indicated in figures).
For real-time experiments, the reaction was initiated during imaging (after 10–30 s) by delivery of 50 nM 50S–Cy5 (where applicable), 2 µM IF2, 10–500 nM EF-G (where applicable, at the specified concentrations, typically 50 nM), 100–1,500 nM ternary complex (where applicable, at the specified concentrations, typically 150 nM), 10–1,000 µM NTPs (each, where applicable, at the specified concentrations) and 1 µM Nus factors (each, where applicable) in the same imaging buffer containing an additional 4 mM GTP. The ternary complex was prepared as described previously70,71. For two-colour translation experiments, a second delivery mix containing 10% of the initial OSC (in imaging buffer) was injected to actively induce photobleaching after 20 min of movie acquisition.

Ribosomal subunit fluctuations

Spontaneous intersubunit rotations can complicate the analysis and assignment of genuine translation transitions22. We therefore chose experimental conditions that minimize interference from spontaneous intersubunit rotations. We used polymix buffer, which a recent study by Ermolenko and colleagues showed reduces the fraction of ribosomes exhibiting spontaneous fluctuations 20-fold, with only 1% of h44–Cy3/H101–Cy5 ribosomes fluctuating spontaneously22. Furthermore, we chose slow translation conditions, under which spontaneous intersubunit rotations (0.3–2 s−1; ref. 22) are much faster than translation, and some spontaneous fluctuations may not be detected at all at our frame time of 200 ms. The difference in timescales between translation and intersubunit fluctuations is especially pronounced during ribosome slowdown, when the two can differ by two orders of magnitude.
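As a rough check of this timescale argument, treating spontaneous rotations as a single first-order process (an assumption) with the rates quoted above and the 200 ms frame time:

$$
\tau_{\mathrm{rot}} = \frac{1}{k_{\mathrm{rot}}}, \quad k_{\mathrm{rot}} = 0.3\text{–}2\ \mathrm{s}^{-1} \;\Rightarrow\; \tau_{\mathrm{rot}} \approx 0.5\text{–}3.3\ \mathrm{s} \approx 2.5\text{–}17\ \text{frames at}\ \Delta t = 0.2\ \mathrm{s}
$$

For comparison, the median non-rotated dwell under elongating conditions reported in the two-colour analysis is about 12.8 s, and dwells lengthen further as the ribosome slows, which is where the separation of timescales is largest.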

Single-molecule instrumentation and analysis

We performed all single-molecule experiments at 21 °C using a custom-built (by Cairn Research: https://www.cairn-research.co.uk/), objective-based (CFI SR HP Apochromat TIRF 100×C Oil) total internal reflection fluorescence (TIRF) microscope equipped with an iLAS system (Cairn Research) and Prime95B sCMOS cameras (Teledyne Photometrics). For standard TIRF experiments (two or three colours), we used a diode-based (OBIS) 532 nm laser at 0.6 kW cm−2 intensity (based on output power). The fluorescence intensities of Cy3, Cy3.5 and Cy5 dyes were recorded at exposure times of 200 or 300 ms. For alternating laser excitation32 (ALEX) experiments, we operated the 532 nm laser at 0.73 kW cm−2 intensity and, in every alternate frame, illuminated the samples with a diode-based 638 nm laser (Omicron LuxX) at 0.12 kW cm−2 intensity (200 ms exposure time for each laser, resulting in a frame rate of approximately 2 frames per second). Typically, 10 min movies were recorded. To determine FRET efficiencies, longer movies (20 min) were acquired to ensure photobleaching of both dyes.

Images were acquired using the MetaMorph software package (Molecular Devices), and single-molecule traces were extracted using the SPARTAN software package (v.3.7.0)72. Subsequent analysis was done using the tMAVEN73 software (when applicable) and with scripts19,74 written in MATLAB R2021a and earlier versions (MathWorks). In brief, three-colour data were assigned by thresholding and two-colour data by HMM; both approaches are detailed below. Data for Fig. 2 were recorded as three-colour data (Fig. 2b,c) and repeated as two-colour data (Fig. 2d–f show combined data from two-colour and three-colour replicates; see Source Data).

(1)

For two-colour translation experiments (30S–Cy3 or 30S–Cy3B and 50S–Cy5; Fig. 2), molecules with a single photobleaching step for both the donor and the acceptor dye were selected in SPARTAN. These traces were baseline-corrected, corrected for donor emission bleedthrough and for the apparent sensitivity of each fluorophore, and then exported to tMAVEN. Evaluation windows were selected from initial 50S subunit joining until (a) one of the dyes photobleached, (b) one dye entered a dark state or (c) distorted fluorescence intensities made further assignment difficult. Transitions were detected and assigned to two states using HMM (composite → vbHMM + Kmeans). For downstream evaluation (Extended Data Fig. 1c–f), these assignments were exported to MATLAB. First, HMM assignments were visually inspected and manually corrected on the basis of anti-correlated intensity changes of both dyes (real FRET transitions; see top trace in Extended Data Fig. 1d). Occasionally, intermediate FRET states were encountered; these were reassigned to high-FRET states using thresholding. For example, for Cy3B, this manual correction reduced the average number of transitions per trace from 14 to 12 under elongating conditions and from 5 to 4 under colliding conditions. Second, traces were corrected for spontaneous subunit fluctuations of the rotated state, encountered especially under colliding conditions (see Extended Data Fig. 1e). To distinguish rotated-state fluctuations (1–2 s timescale22) from genuine translation events (median non-rotated state dwell (3 replicates) = 12.8 ± 4.5 s with 150 nM aa–tRNA), we used the dwell times of non-rotated states under elongating conditions to set a threshold for excluding non-translational events (see Extended Data Fig. 1c–e). For this, we determined the 5th percentile of all non-rotated state dwells under elongating conditions.
Whenever two consecutive non-rotated dwells were both shorter than the 5th-percentile threshold (2.18/0.936 s; assuming independent dwells, the probability of this occurring under normal translation conditions is 0.05² = 0.25%), the translation count was stopped by reassigning those two dwells and all subsequent non-rotated-state HMM intensities to rotated-state HMM intensities (see Extended Data Fig. 1e, top trace). Afterwards, very short non-rotated dwells (below the 1st percentile; 0.936/0.5834 s) were also removed. The last translation event in each trace was limited by photobleaching. We retained these events for data evaluation, as they report on ribosome stalling after collision with the RNAP or after reaching the stop codon.
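The dwell-filtering rule above can be sketched in Python as follows. This is an illustration under stated assumptions: dwells are supplied per trace in temporal order, percentiles use NumPy's default linear interpolation (MATLAB's `prctile` interpolates differently), and all function names are hypothetical.

```python
import numpy as np

# Probability that two independent dwells both fall below the 5th percentile
# under normal translation (independence assumed): 0.05**2, i.e. 0.25 %.
P_TWO_SHORT = 0.05 ** 2


def percentile_thresholds(elongating_nr_dwells):
    """5th- and 1st-percentile thresholds from non-rotated dwells (elongating conditions)."""
    d = np.asarray(elongating_nr_dwells, dtype=float)
    return float(np.percentile(d, 5)), float(np.percentile(d, 1))


def filter_translation_dwells(nr_dwells, thr5, thr1):
    """Return the non-rotated dwells of one trace that count as translation steps.

    Counting stops at the first pair of consecutive non-rotated dwells that are
    both shorter than thr5; that pair and all later non-rotated dwells are
    treated as rotated-state fluctuations. Of the dwells kept, those shorter
    than thr1 are then removed.
    """
    d = list(nr_dwells)
    cut = len(d)  # default: keep the whole trace
    for i in range(len(d) - 1):
        if d[i] < thr5 and d[i + 1] < thr5:
            cut = i
            break
    return [x for x in d[:cut] if x >= thr1]
```

Using 2.18 s and 0.936 s as example 5th- and 1st-percentile thresholds, the dwell sequence [10, 5, 0.5, 12, 1.0, 1.5, 20] s is truncated at the pair (1.0, 1.5) s, and the isolated 0.5 s dwell is then dropped, leaving [10, 5, 12] s.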

(2)

For three-colour translation experiments (30S–Cy3, DNA–Cy3.5 and 50S–Cy5; Fig. 2), HMM assignment was not possible owing to spectral bleedthrough between channels (Fig. 2b,c; see also Extended Data Fig. 7c,d). FRET transitions were therefore assigned using trace-specific thresholds, selecting only productive FRET transitions (high-FRET to low-FRET state or vice versa; Extended Data Figs. 1d,e and 7c,d and Supplementary Fig. 11 of ref. 15). The box plots in Fig. 2e are based on the following numbers of molecules/dwells (see also Extended Data Fig. 2c); numbers for the first 6 amino acids (see Source Data for the full list) are: elongating, non-rotated state: n = 315, 315, 313, 312, 306 and 296; elongating, rotated state: n = 315, 315, 312, 307, 298 and 283; colliding, non-rotated state: n = 307, 306, 302, 275, 213 and 137; colliding, rotated state: n = 307, 305, 293, 254, 187 and 117. Non-rotated and rotated state dwell times were used to calculate empirical cumulative distribution functions (ecdf, MATLAB), which were fitted to single-exponential functions in MATLAB (fit, using non-linear least squares). If an initial double-exponential fit yielded a population representing less than 10% of the data, the data were classified as following single-exponential kinetics74.
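A Python sketch of the cumulative dwell-time fitting and the 10% rule, using SciPy's `curve_fit` (non-linear least squares) in place of MATLAB's `fit`. The helper names, starting values and the normal-approximation confidence interval are assumptions, not the authors' scripts.

```python
import numpy as np
from scipy.optimize import curve_fit


def ecdf(dwells):
    """Empirical CDF of dwell times (analogous to MATLAB ecdf, without the zero point)."""
    x = np.sort(np.asarray(dwells, dtype=float))
    return x, np.arange(1, x.size + 1) / x.size


def single_exp_cdf(t, k):
    return 1.0 - np.exp(-k * t)


def double_exp_cdf(t, a, k1, k2):
    # a is the amplitude (population fraction) of the k1 component
    return 1.0 - a * np.exp(-k1 * t) - (1.0 - a) * np.exp(-k2 * t)


def fit_single(dwells):
    """Least-squares fit of the ECDF; returns k and an approximate 95% CI."""
    x, y = ecdf(dwells)
    popt, pcov = curve_fit(single_exp_cdf, x, y, p0=[1.0 / x.mean()])
    k = popt[0]
    half = 1.96 * np.sqrt(pcov[0, 0])  # normal approximation to the CI
    return k, (k - half, k + half)


def fit_double(dwells):
    """Double-exponential ECDF fit with a bounded to [0, 1] and non-negative rates."""
    x, y = ecdf(dwells)
    k0 = 1.0 / x.mean()
    popt, _ = curve_fit(double_exp_cdf, x, y, p0=[0.5, 2.0 * k0, 0.5 * k0],
                        bounds=([0.0, 0.0, 0.0], [1.0, np.inf, np.inf]))
    return tuple(popt)


def is_single_exponential(a, min_fraction=0.10):
    """10% rule: single-exponential if either fitted population is below 10%.

    Caveat: if the two fitted rates nearly coincide, the split a / (1 - a) is
    ill-defined, so the double fit should also be inspected.
    """
    return min(a, 1.0 - a) < min_fraction
```

In practice, `fit_double` would be run first and `is_single_exponential` applied to its amplitude before reporting the rate from `fit_single`.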

(3)

Two-colour equilibrium coupling experiments (Fig. 3) were acquired using ALEX. Traces were first selected for assembled expressomes (30S–Cy3 signal and directly excited RNAP–Cy5 signal) using SPARTAN. Next, traces were baseline-corrected and corrected for (a) donor emission bleedthrough, (b) direct excitation of the acceptor dye and (c) the apparent sensitivity of each fluorophore. Selected traces were exported to tMAVEN. Evaluation windows were selected to include only periods in which the directly excited RNAP–Cy5 signal persisted for at least 100 s. To model the number of FRET states, we used HMM (Global → vbConsensus + Model selection). The resulting number of states was used to assign FRET states using vbHMM + Kmeans. Selected evaluation windows and assignments were exported for downstream evaluation. FRET efficiencies within the evaluation windows were extracted in MATLAB and fitted to two or three Gaussian distributions (depending on the number of modelled states) by maximum likelihood parameter estimation. For dwell time analysis, HMM assignments from tMAVEN were visually inspected in MATLAB and corrected on the basis of true anti-correlated behaviour of donor and acceptor dyes. States with EFRET = 0 were assigned to uncoupled expressomes, and all states with EFRET > 0 (loosely coupled and coupled) were binned into a single coupled state. Recoupling rates were obtained by single-exponential fitting of cumulative dwell time distributions for dwells with EFRET = 0.
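A common way to combine the three ALEX corrections listed above is F_A = I_DA − α·I_DD − δ·I_AA and E = F_A/(γ·I_DD + F_A). The sketch below assumes this convention (α, δ and γ determined separately, with δ defined relative to the directly excited acceptor signal), together with the EFRET = 0 / EFRET > 0 binning used to extract dwells for the recoupling fit. SPARTAN and tMAVEN may implement these steps differently, and the function names are hypothetical.

```python
import numpy as np


def corrected_fret(i_dd, i_da, i_aa, alpha, delta, gamma):
    """Corrected FRET efficiency from ALEX intensities (hypothetical helper).

    i_dd: donor emission under donor excitation
    i_da: acceptor emission under donor excitation
    i_aa: acceptor emission under direct acceptor excitation
    alpha: donor bleedthrough into the acceptor channel
    delta: direct acceptor excitation by the donor laser, relative to i_aa
    gamma: apparent sensitivity of the acceptor relative to the donor
    """
    i_dd = np.asarray(i_dd, dtype=float)
    f_a = np.asarray(i_da, dtype=float) - alpha * i_dd - delta * np.asarray(i_aa, dtype=float)
    return f_a / (gamma * i_dd + f_a)


def uncoupled_dwells(state_efret, frame_time):
    """Dwell times (s) of runs with E_FRET == 0 in a per-frame state path.

    All states with E_FRET > 0 (loosely coupled and coupled) count as one
    coupled state. Runs touching the window edges are truncated by the
    window; they are kept here, and whether to censor them is left open.
    """
    coupled = np.asarray(state_efret, dtype=float) > 0
    dwells, run = [], 0
    for c in coupled:
        if not c:
            run += 1                         # extend the current uncoupled run
        elif run:
            dwells.append(run * frame_time)  # uncoupled run ended by recoupling
            run = 0
    if run:
        dwells.append(run * frame_time)      # run cut off by the window end
    return dwells
```

The resulting uncoupled dwells could then be passed to a single-exponential cumulative fit to estimate a recoupling rate.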

(4)

For three-colour real-time coupling experiments (Fig. 4; ribosome stalled on the RBS, RNAP chased with NTPs), assembled expressome molecules were selected (30S–Cy3 signal, DNA–2×Cy3.5 signal and, for ALEX, the directly excited RNAP–Cy5 signal) and assigned by thresholding owing to spectral bleedthrough between channels (see Extended Data Fig. 7c,d). When applicable, dwell times for transcription or coupling were extracted. To determine the fraction of coupled molecules up to the end of transcription, we assigned the characteristic signal (30S–Cy3/DNA–2×Cy3.5 FRET) and calculated: fraction coupled = (number of coupled molecules with 30S–DNA FRET)/(total number of expressome molecules).
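A minimal sketch of the fraction-coupled calculation. The coupling criterion used here (30S–DNA FRET above a trace-specific threshold at any frame before the end of transcription) and all names are hypothetical simplifications of the thresholding described above.

```python
import numpy as np


def is_coupled(fret_trace, threshold, end_frame):
    """Hypothetical criterion: 30S-DNA FRET exceeds the trace-specific
    threshold at any frame before the end of transcription."""
    f = np.asarray(fret_trace, dtype=float)[:end_frame]
    return bool(np.any(f > threshold))


def fraction_coupled(traces):
    """traces: list of (fret_trace, threshold, end_frame) tuples, one per expressome."""
    flags = [is_coupled(*tr) for tr in traces]
    return sum(flags) / len(flags)
```

For three expressomes of which only one shows above-threshold 30S–DNA FRET before transcription ends, the function returns 1/3.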

(5)

For two- and three-colour transcription data, only traces with single expressome molecules (containing 30S–Cy3 (if present) and DNA–2×Cy3.5) were evaluated. Transcription times were determined as the time from reagent delivery until DNA template dissociation, assigned using trace-specific thresholds (Extended Data Fig. 7c,d). Average transcription times (Extended Data Fig. 1g) were obtained by fitting dwell time distributions to a convolution of a Gaussian (describing transcription) and an exponential function (describing RNAP stalling at the 3′ end before termination)15.
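A Gaussian convolved with an exponential is the exponentially modified Gaussian. The sketch below fits it by maximum likelihood with SciPy's `scipy.stats.exponnorm`; this fitting criterion is a swapped-in choice (the text above does not state how the fit was performed), starting values come from the distribution's moments, and the function and key names are assumptions.

```python
import numpy as np
from scipy.stats import exponnorm, skew


def fit_transcription_times(times):
    """Maximum-likelihood fit of an exponentially modified Gaussian.

    Model: T = G + E, G ~ Normal(mu, sigma) (transcription) and
    E ~ Exponential with mean tau (stalling at the 3' end before termination).
    SciPy parameterizes this as exponnorm(K, loc=mu, scale=sigma), K = tau / sigma.
    """
    x = np.asarray(times, dtype=float)
    # Method-of-moments starting values: the ex-Gaussian skewness is
    # 2 tau^3 / (sigma^2 + tau^2)^1.5, which lies between 0 and 2.
    s = min(max(skew(x), 0.01), 1.99)
    sd = x.std()
    tau0 = sd * (s / 2.0) ** (1.0 / 3.0)
    sigma0 = np.sqrt(sd**2 - tau0**2)
    K, mu, sigma = exponnorm.fit(x, tau0 / sigma0, loc=x.mean() - tau0, scale=sigma0)
    tau = K * sigma
    return {"mu": mu, "sigma": sigma, "tau": tau, "mean": mu + tau}
```

For data simulated as Normal(100 s, 10 s) plus an exponential with a 20 s mean, the fitted μ and τ should come out close to 100 s and 20 s, and μ + τ gives the average transcription time.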

For representation, single-molecule traces were smoothed by zero-phase digital filtering with a 3-point window using the filtfilt function in MATLAB; unsmoothed data were used for data evaluation.
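A SciPy equivalent of this display-only smoothing, assuming a 3-point moving-average kernel passed to `filtfilt` (the kernel itself is not specified above). Edge handling differs slightly between SciPy's and MATLAB's `filtfilt`, and the function name is hypothetical.

```python
import numpy as np
from scipy.signal import filtfilt


def smooth_for_display(trace, window=3):
    """Zero-phase moving-average smoothing for plotting only.

    filtfilt runs the FIR filter forwards and backwards, so features are not
    shifted in time. With a 3-point kernel the trace must be longer than
    9 points (SciPy's default padding length).
    """
    b = np.ones(window) / window  # moving-average numerator coefficients
    return filtfilt(b, [1.0], np.asarray(trace, dtype=float))
```

Because the filter is applied in both directions, a single-frame spike stays centred on its original frame, which keeps transition times in smoothed plots aligned with the raw data.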

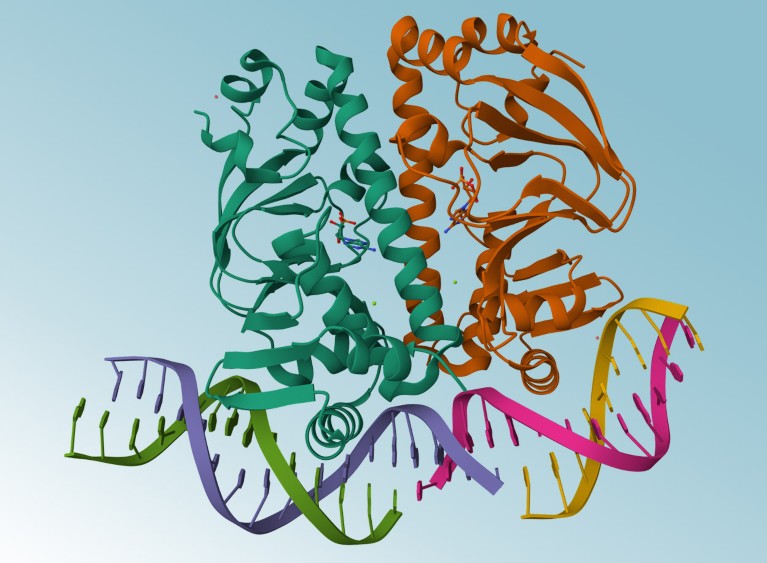

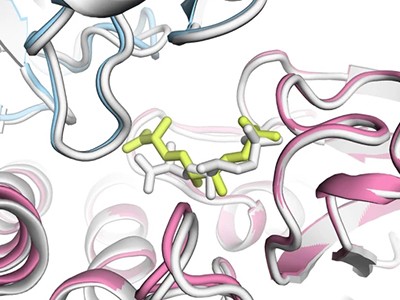

Structures were visualized with PyMOL (v.2.4) or ChimeraX (v.1.3). All figures were prepared with MATLAB R2021a, Excel 2016 and Adobe Illustrator.

Statistical analysis

Reported error bars represent the s.d. of replicates, as indicated in the figure captions. Errors in dwell time fits represent 95% confidence intervals obtained from fits to single-exponential functions, as indicated. Statistical details of individual experiments, including the number of replicates, analysed molecules or dwells used in the dwell time analyses, are described in the main text, figure legends, Supplementary Information or Source Data. Each single-molecule experiment was performed with two to three biological replicates, each on a different sample (see figure captions for the number of replicates and the Source Data file for more information). P values were determined by a two-sided Wilcoxon–Mann–Whitney test in MATLAB and are reported as *P < 0.05, **P < 0.01 and ***P < 0.001. Values and test statistics are provided in Source Data. In all box plots, the centre line is the median, box edges indicate the 25th and 75th percentiles and whiskers extend to 1.5× the interquartile range.
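A Python sketch of the significance test and box-plot conventions described above, using SciPy's `mannwhitneyu` in place of the MATLAB implementation. Whiskers are taken to the most extreme data points within 1.5× IQR of the box edges (the default convention of MATLAB's `boxplot`), and NumPy's percentile interpolation may differ slightly from MATLAB's. All function names are hypothetical.

```python
import numpy as np
from scipy.stats import mannwhitneyu


def significance_stars(p):
    """Map a P value to the star notation used in the figures ("" if P >= 0.05)."""
    if p < 0.001:
        return "***"
    if p < 0.01:
        return "**"
    if p < 0.05:
        return "*"
    return ""


def compare(a, b):
    """Two-sided Wilcoxon-Mann-Whitney test; returns (U statistic, P, stars)."""
    res = mannwhitneyu(a, b, alternative="two-sided")
    return res.statistic, res.pvalue, significance_stars(res.pvalue)


def box_stats(x):
    """Median, quartiles and 1.5x IQR whisker ends for one box."""
    x = np.asarray(x, dtype=float)
    q1, med, q3 = np.percentile(x, [25, 50, 75])
    iqr = q3 - q1
    return {
        "median": med, "q1": q1, "q3": q3,
        # whiskers stop at the most extreme points inside the 1.5x IQR fences
        "whisker_low": x[x >= q1 - 1.5 * iqr].min(),
        "whisker_high": x[x <= q3 + 1.5 * iqr].max(),
    }
```

For example, comparing dwell sets [1, 2, 3, 4, 5] and [6, 7, 8, 9, 10] gives U = 0 and P < 0.05; for box-plot data [1, 2, 3, 4, 100], the upper whisker ends at 4 and 100 is drawn as an outlier.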

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.
